Resource allocation

This topic describes container resource allocation logic, how you can configure resource limits, and troubleshooting for issues related to resource allocation.

- What are Kubernetes container requests? A request is the amount of a resource that a container is guaranteed to get. If a container requests a resource, Kubernetes schedules it only on a node that can provide the requested amount.

- What are Kubernetes container limits? A limit is the upper bound for resource consumption by a Kubernetes container. A container that exceeds its memory limit can be terminated (an out-of-memory kill), and a container that reaches its CPU limit is throttled rather than terminated.

For more information, go to the Kubernetes documentation on Kubernetes best practices: Resource requests and limits.

Build pod resource allocation

By default, resource requests are always set to the minimum, and additional resources are requested only as needed during build execution, depending on the resource request logic. Resources are not consumed after a step's execution is done. Default resource minimums (requests) and maximums (limits) are as follows:

| Component | Minimum CPU | Maximum CPU | Minimum memory | Maximum memory |
|---|---|---|---|---|
| Steps | 10m | 400m | 10Mi | 500Mi |
| Add on (execution-related steps on containers in Kubernetes pods) | 100m | 100m | 100Mi | 100Mi |

Override resource limits

For an individual step, you can use the Set Container Resources settings to override the maximum resource limits:

- Limit Memory: Maximum memory that the container can use. You can express memory as a plain integer or as a fixed-point number with the suffixes G or M. You can also use the power-of-two equivalents, Gi or Mi. Do not include spaces when entering a fixed value. The default is 500Mi.

- Limit CPU: The maximum number of cores that the container can use. CPU limits are measured in CPU units. Fractional requests are allowed. For example, you can specify one hundred millicpu as 0.1 or 100m. The default is 400m. For more information, go to Resource units in Kubernetes.
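To make the unit notation concrete, the following Python sketch converts CPU and memory strings into millicores and bytes. It is an illustration only, not Harness or Kubernetes code: the function names `cpu_to_millicores` and `memory_to_bytes` are hypothetical, and it handles only the suffixes mentioned above (m for CPU; G, M, Gi, and Mi for memory).

```python
from decimal import Decimal

# Memory suffixes covered by this sketch: decimal (G, M) and power-of-two (Gi, Mi).
MEMORY_SUFFIXES = {
    "Gi": 2**30,
    "Mi": 2**20,
    "G": 10**9,
    "M": 10**6,
}


def cpu_to_millicores(value: str) -> int:
    """Convert a CPU quantity such as '0.1' or '100m' to millicores."""
    value = value.strip()
    if value.endswith("m"):
        return int(value[:-1])
    # A plain number is a count of cores; one core is 1000 millicores.
    return int(Decimal(value) * 1000)


def memory_to_bytes(value: str) -> int:
    """Convert a memory quantity such as '500Mi' or '1G' to bytes."""
    value = value.strip()
    # Check two-letter suffixes first so that 'Mi' is not mistaken for 'M'.
    for suffix in sorted(MEMORY_SUFFIXES, key=len, reverse=True):
        if value.endswith(suffix):
            number = Decimal(value[: -len(suffix)])
            return int(number * MEMORY_SUFFIXES[suffix])
    # No suffix: the value is a plain integer number of bytes.
    return int(Decimal(value))


print(cpu_to_millicores("0.1") == cpu_to_millicores("100m"))  # True
print(memory_to_bytes("500Mi"))  # 524288000
print(memory_to_bytes("500M"))   # 500000000
```

Note that 500Mi and 500M are not the same amount: the power-of-two form is roughly 5% larger.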

Set Container Resources settings don't apply to self-hosted VM or Harness Cloud build infrastructures.

To increase default resource limits across the board, you can contact Harness Support to enable the feature flag CI_INCREASE_DEFAULT_RESOURCES. This feature flag increases maximum CPU to 1000m and maximum memory to 3000Mi.

Resource request logic and calculations

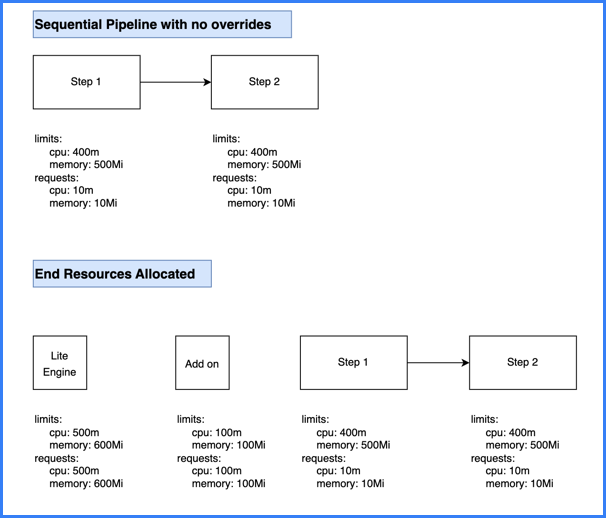

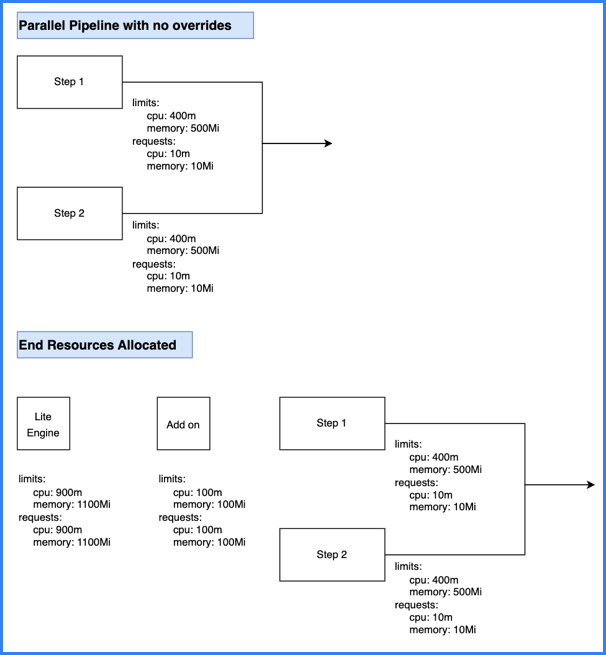

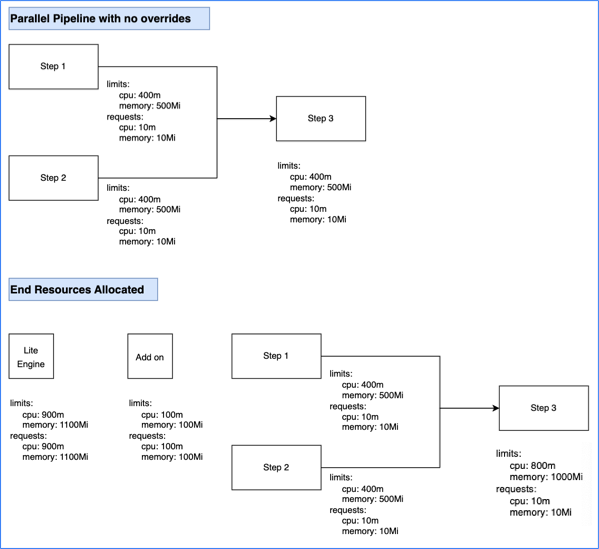

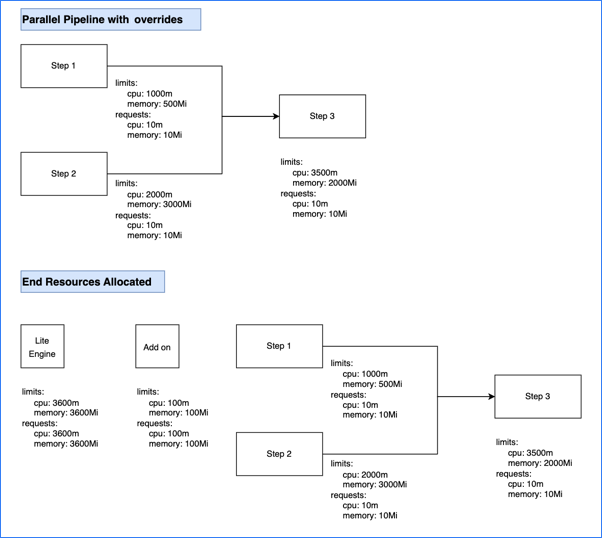

Limit and request values depend on whether steps run in parallel or sequentially. For parallel steps, the resources of all steps in the parallel group are summed. For sequential steps, the maximum resources needed by any one of the sequential steps is used.

The total computed resource requirements are set as the limit and request values for the stage container. For example, if total CPU is 600m, then the stage container's CPU limit and request values are both set to 600m. This ensures selection of appropriately-sized pods, and it avoids over- or under-utilization of resources. The stage container is also referred to as the Lite Engine.
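The sum-for-parallel, maximum-for-sequential rule can be sketched in Python as follows. This is a simplified model under stated assumptions, not the Harness implementation: the `Step` class and `stage_resources` function are hypothetical names, step groups, background steps, and the add-on container are not modeled, and steps without overrides are assumed to use the default limits of 400m CPU and 500Mi memory.

```python
from dataclasses import dataclass

DEFAULT_CPU_M = 400      # default step CPU limit, in millicores
DEFAULT_MEMORY_MI = 500  # default step memory limit, in Mi


@dataclass
class Step:
    """Hypothetical model of a step and its resource limits."""
    name: str
    cpu_m: int = DEFAULT_CPU_M
    memory_mi: int = DEFAULT_MEMORY_MI


def stage_resources(stage):
    """Return (cpu_m, memory_mi) for a stage.

    `stage` is a list executed in order. Each item is either a single
    Step or a list of Steps that run in parallel.
    """
    cpu = memory = 0
    for item in stage:
        group = item if isinstance(item, list) else [item]
        # Parallel steps: sum the resources of every step in the group.
        group_cpu = sum(step.cpu_m for step in group)
        group_memory = sum(step.memory_mi for step in group)
        # Sequential items: keep the largest requirement seen so far.
        cpu = max(cpu, group_cpu)
        memory = max(memory, group_memory)
    return cpu, memory


stage = [
    Step("build"),                       # 400m, 500Mi
    [Step("unit tests"), Step("lint")],  # parallel: 800m, 1000Mi
    Step("package", cpu_m=1000),         # CPU override: 1000m, 500Mi
]
print(stage_resources(stage))  # (1000, 1000)
```

In this hypothetical stage, the CPU total comes from the overridden sequential step, while the memory total comes from the parallel group. The two values are computed independently.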

Resource calculation examples

Calculation example #1: Sequential steps

Calculation example #2: Parallel steps

Calculation example #3: Sequential and parallel steps

Calculation example #4: Sequential and parallel steps with overrides

Calculation example #5: Step groups

Calculation example #6: Background steps

Troubleshooting

The following troubleshooting guidance addresses some issues you might experience with resource limits or pod evictions. For additional troubleshooting guidance, go to Troubleshoot CI.

CI pods appear to be evicted by Kubernetes autoscaling

Harness CI pods shouldn't be evicted due to autoscaling of Kubernetes nodes because Kubernetes doesn't evict pods that aren't backed by a controller object. However, if you notice either sporadic pod evictions or failures in the Initialize step in your Build logs, add the following annotation to your Kubernetes cluster build infrastructure settings:

"cluster-autoscaler.kubernetes.io/safe-to-evict": "false"

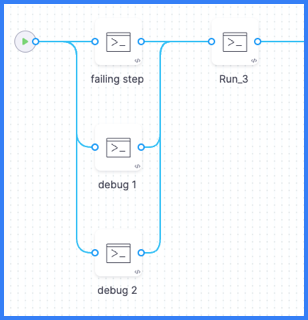

Use a parallel step to monitor failures

If you need to debug failures that aren't captured in the standard Build logs, you can add two Run steps to your pipeline that run in parallel with the failing step. The Run steps contain commands that monitor the activity of the failing step and generate logs that can help you debug the failing step.

In your pipeline's YAML, add the two debug monitoring steps alongside the failing step, as shown in the following example, and group the three steps in parallel. Make sure the timeout is long enough to cover the time it takes for the failing step to fail; otherwise, the debug steps will time out before capturing the full logs for the failing step.

    - parallel:
        - step:
            type: Run
            name: failing step
            ...
        - step:
            type: Run
            name: Debug monitor 1
            identifier: debug_monitor_1
            spec:
              connectorRef: YOUR_DOCKER_CONNECTOR ## Specify your Docker connector's ID.
              image: alpine ## Specify an image relevant to your build.
              shell: Sh
              command: top -d 10
            timeout: 10m ## Allow enough time to cover the failing step.
        - step:
            type: Run
            name: Debug monitor 2
            identifier: debug_monitor_2
            spec:
              connectorRef: YOUR_DOCKER_CONNECTOR ## Specify your Docker connector's ID.
              image: alpine ## Specify an image relevant to your build.
              shell: Sh
              command: |-
                i=0
                while [ $i -lt 10 ]
                do
                  df -h
                  du -sh *
                  sleep 10
                  i=`expr $i + 1`
                done
            timeout: 10m ## Allow enough time to cover the failing step.
    - step: ## Other steps are outside the parallel group.
        type: Run
        name: Run 3
        ...

After adding the debug monitoring steps, run your pipeline, and then check the debug steps' Build logs.

Your parallel group (the steps listed under the - parallel entry in the YAML) should include only the two debug monitoring steps and the failing step.

You can arrange steps in parallel in the Visual editor by dragging and dropping steps to rearrange them.

Docker Hub rate limiting

By default, Harness uses anonymous access to Docker Hub to pull Harness images. If you experience rate limiting issues when pulling images, try one of these solutions:

- Use credentialed access, rather than anonymous access, to pull Harness CI images.

- Configure the default Docker connector to pull images from the public Harness GCR project instead of Docker Hub.

- Pull Harness images from your own private registry.

For instructions on each of these options, go to Connect to the Harness container image registry.